How does IoT challenge our perspective on data ethics?

Funda Ustek-Spilda | April 28, 2019

In ‘What is data ethics?’ (2016, p. 1), Luciano Floridi and Mariarosaria Taddeo define data ethics as:

"...a new branch of ethics that studies and evaluates moral problems related to data (including generation, recording, curation, processing, dissemination, sharing and use), algorithms (including artificial intelligence, artificial agents, machine learning and robots) and corresponding practices (including responsible innovation, programming, hacking and professional codes) in order to formulate and support morally good solutions (e.g. right conducts or right values)"

Luciano Floridi and Mariarosaria Taddeo

According to this conceptualisation, data ethics builds on computer and information ethics but shifts the level of abstraction of ethical inquiries from being information-centric to being data-centric. Floridi and Taddeo note that this shift entails that ethical analyses become concentrated on the content and nature of computational operations, including the interactions among hardware, software and data – rather than the specific digital technologies that enable such interactions. This is why, they argue, data ethics should be developed as macro ethics so that the ethical impact and implications of data science and its applications can be addressed within a holistic and inclusive framework.

I agree with Floridi and Taddeo that the interactions among hardware, software and data should be included in data ethics, but disagree that the specific digital technologies that enable such interactions can be omitted from ethical analyses. More specifically, I note that the Internet of Things (IoT) presents important challenges to this focus on data and its interactions, as hardware, software, sensors and networks might all, individually or together, present important ethical challenges and risks. Consequently, in this blog post, I explore how the boundaries between these technologies often get blurred in IoT, and how a data ethics approach would be limited in understanding and addressing potential ethical risks if it ignores the affordances and limitations of the specific digital technologies involved in emerging technologies.

Data Ethics: what data, which ethics?

Data ethics has come to be framed around Big Data ethics in the literature, most notably related to data sharing and usage (Herschel and Miori, 2017), datafication (Mai, 2016), analytics of tracking (O’Leary, 2016; Taylor, 2016; Willis, 2013) and selection bias (Tam and Kim, 2018). In most of these analyses, the focus is on privacy and security of data from a socio-technical perspective (Zwitter, 2014) or data justice (Taylor, 2017). Consequently, the conceptualization of ‘ethics of data’ has focused on “ethical problems posed by the collection and analysis of large datasets and on issues ranging from the use of big data – to profiling, advertising, as well as open data” (Floridi and Taddeo, 2016, p. 2). Key issues have been identified as re-identification of individuals through data mining, linking, merging or re-using of large datasets (Ibid), tracking and surveillance of individuals (Gabriels, 2016; Peacock, 2014; Taylor, 2016b), as well as risks for individual and group privacy or privacy of children, which might lead to bias, discrimination or loss of agency (Floridi and Taddeo, 2016, p. 2; Mai, 2016; Smith and Shade, 2018).

Focusing on data and its interactions with hardware and software, however, might be limiting the kind of ethical questions we ask. While not denying the importance of security and privacy, I argue that we need to widen the scope of the questions we ask when reflecting on emerging technologies, and also evaluate which ethical approaches guide our thinking.

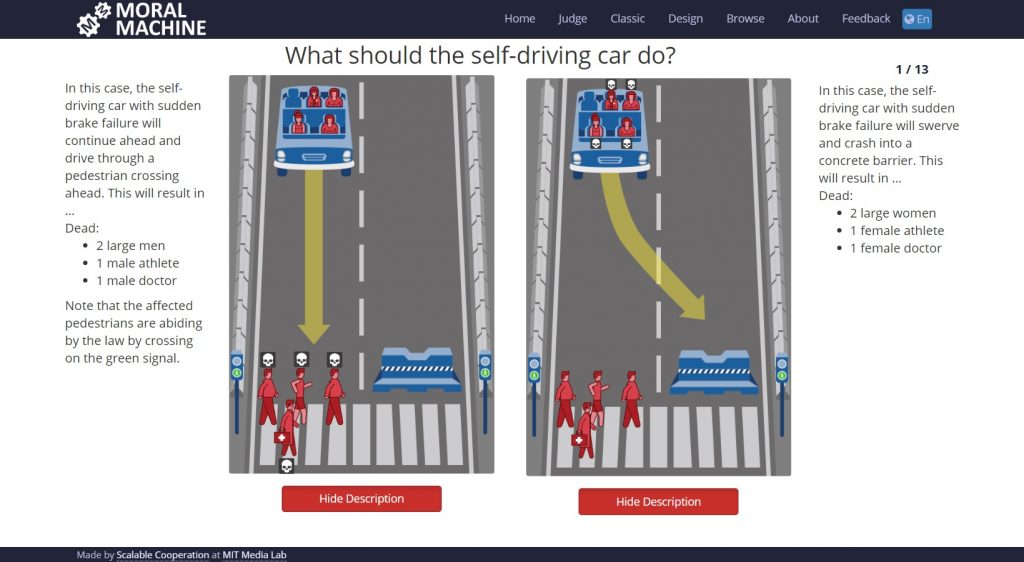

In a previous blog post published here on our VIRT-EU website [1], I noted that much of the ethical thinking in IoT and emerging technologies is shaped by consequentialist ethics, which focuses on the consequences of decisions and actions. Take, for instance, the MIT Media Lab’s Moral Machine [2], which is “A platform for gathering human perspective on moral decisions made by machine intelligence, such as self-driving cars.” When you “start judging” on their website, you browse through scenarios and make decisions on “What should the self-driving car do?”, as in the example shown here. Should the self-driving car run over pedestrians who are law-abiding, “large” women and men, executives, doctors, homeless, elderly, children or animals? The logic of ethics in the simulation platform is that someone will have to die, and machines (though you are helping them here) need to choose who. This consequentialist thinking overlooks the question of whether somebody needs to die to begin with. By treating killing as inevitable, inescapable and imminent, it never questions the morality of killing itself. Thus, it frames the question around whose death is least consequential or who it would be ‘moral’ to kill, given the circumstances.

At the end of the simulation, you get an evaluation of your decisions, which is not meant to ‘judge’ them but merely to visualize them in comparison to the average choices made by others who played. It tells you your most saved and most killed characters, who you chose to protect, whether you had a gender, age, ‘fitness’ or ‘social value’ (I’d say social status) preference, or whether you made decisions based on whether the characters were abiding by the law. While the simulation might be useful in revealing unconscious biases, it nevertheless only considers them relevant if they have a bearing on the ‘consequences’ you would like to achieve, given the circumstances of inevitable death.

This ‘inevitability’ in reflecting on ethical issues is something I have also seen in our fieldwork with designers and developers of IoT. Privacy mishaps might happen and security breaches are inevitable, I was told, as one builds new technologies and cannot see how they might or might not work. The motto ‘Move fast and break things’ was used over and over again to explain how ‘disruption’ should be prioritized and that, otherwise, ethics could stall innovative thinking. When asked how they deal with the fact that they do not or cannot always see the consequences of their decisions when building new technologies, developers also told me that this was considered part of the ‘exciting’ nature of technology, although they rarely considered that disastrous consequences might result. I often followed these discussions by asking what the most terrible thing to happen would be if their technology were adopted by everyone on the planet. This question, however, often yielded answers pertaining to increased efficiency and higher optimization, neither of which was considered a disastrous consequence in and of itself.

In contrast to consequentialist thinking, I have also met developers and designers who were guided by their own principles and values. Coming from a more deontological ethical perspective, they identified ‘rights’ and ‘wrongs’ in the industry and tried to align the technologies they build with the former rather than the latter. A developer I met at a conference in the Netherlands, for instance, refused to integrate facial recognition software into his products, although his clients demanded it. He explained that despite the loss of business, he refused to build technologies just for the sake of building them, without reflecting on what they entail for society at large. He was critical of facial recognition technologies being incorporated into anything and everything under the radar, “because people think they are cool.”

Along the spectrum between consequentialist and deontological ethics, we at VIRT-EU identified a wide range of approaches that developers and designers might take. To reflect on this variety and on the contextuality of the decisions they come to make, we have built a multi-layered ethical framework based on Virtue Ethics, the Capability Approach and Care Ethics. I explained this approach in my earlier blog post [3], but I would like to briefly recap it here:

“Virtue ethics comes from the Aristotelean schools of thought where leading a virtuous life is considered the basis of leading a good life. These virtues, however, are not qualities one is born with but learned and acquired through continuous striving towards being good, and indeed, Aristotle counts the continuous struggle to turn acting in a virtuous way into the habit as a virtue on its own. While virtue ethics mainly focuses on an individual’s process of attempting to live a good life, the capability approach examines the ability to lead a good life, given the existing social contexts individuals are embedded in, which present them with a variety of opportunities, but also constraints. Care ethics brings both the Virtue Ethics and the Capability Approach together in that, it suggests taking into account the shifting obligations and responsibilities of individuals as they are positioned in a web of relations while examining their responsibilities for their decisions. By bringing these approaches together into a coherent framework, we are able to acknowledge that ethics as a process is not exclusively dependent on the principles and actions of the individuals, but acknowledge the inherent dialectic of life where conflicting demands, obligations and structural conditions can both limit and shape even the best intentions.”

Funda Ustek-Spilda

Consequently, ‘what data’ and ‘which ethics’ are both very important questions to ask when analyzing emerging technologies. These two questions have the potential to help us reflect on the kind of ethical thinking we are engaged in (for instance, when our thinking has been limited by consequentialist approaches) and to take notice when the contextual reasons for making decisions are ignored.

Ethics beyond data

Floridi and Taddeo (2016) note that labels such as ‘robo-ethics’ or ‘machine-ethics’ miss the point, because “it is not the hardware that causes ethical problems, it is what the hardware does with the software and the data that represents the source of our new difficulties.” This is why they locate potential ethical problems at the level of data collection, curation, analysis and use, rather than at the level of information. I argue that IoT challenges this perspective, as hardware on its own does raise important ethical questions, as do software, platforms and networks, and the myriad ways in which these technologies can be connected. This argument stems from the constructivist insight in Science and Technology Studies (STS), which goes beyond the identification of politics inside labs (here, technology start-ups), as it also pertains to the technologies of the field, its methods and theories and its ways of making worlds (Haraway 1997 in de la Bellacasa, 2011, p. 86; Goodman, 1978).

In this blog post, I am going to develop this argument by focusing on four areas of concern expressed by the developers we met in the field: Sustainability, End of life, Security and Privacy. All of these concerns relate both to technical aspects of IoT and to the practices developers might choose to exercise in their work.

Sustainability

Sustainability is increasingly a concern expressed by developers. Sometimes this concern arises from the observation that consumers are more interested in purchasing sustainable products (that is, from the consequentialist thinking that the more sustainable the product, the higher its chances of profitability); other times it arises from a genuine concern about the environment and the sustainability of IoT devices.

IoT devices often blend various types of hardware. While some of the hardware might be recyclable on its own, when combined with various types of sensors, cables and connectors, it often becomes un-recyclable and ends up contributing to the growing volume of e-waste. According to ifixit.org, an organization that supports reuse and repair, 60% of e-waste ends up in landfill and, even when it is recycled, 30% of significant electronic material gets lost [4]. E-waste is packed with toxic chemicals (arsenic, lead and poly-brominated flame retardants), which are incredibly costly both to extract and to recycle; the latter especially because there are not enough recyclers.

Considering that 80% of IoT startups are expected to fail within the first two years of their ventures, the high volume and high turnover of the industry entail that IoT companies dealing with hardware produce a lot of e-waste. This problem has many faces. The first is that most start-ups treat their initial hardware production as a test case: they do not necessarily invest in the highest-quality parts, as they would just like to see how, or if, the prototype works. The second is that in the start-up world, due to complicated funding systems, a product sometimes succeeds in the investment round but not once it is released to the market. The third is that companies constantly improve on their initial product as they go through different investment rounds, become able to hire designers and UX researchers, and get feedback from end-users, which means that older versions of the hardware become redundant and a lot gets thrown away. There may be other reasons why unsustainable practices often follow hardware, but these three have come up in our fieldwork time and time again.

The reasons cited above are also among the reasons why hardware is considered very costly for start-ups, and why many IoT start-ups turn to software for testing their ideas. But software is not necessarily sustainable either. It may seem not to use physical resources or create physical waste, but a tremendous amount of energy is still necessary to manufacture and power devices, data centers and related infrastructure, as a Greenpeace report says [5]. Data mining, deep learning, blockchain applications and high-frequency algorithmic trading are all energy-hungry and use enormous computational power and electricity. Although these applications are not limited to the IoT sector, some IoT start-ups also use them. For instance, Provenance, a start-up that is known for its environmental values and is concerned with making supply chains transparent, to show the origin, journey and impact of the products we buy every day [6], uses blockchain. Their blockchain engineer, Thibaut Schaeffer, explains this choice by noting that it “opens new horizons for brand transparency”. While this might well be the case for Provenance, several developers I have talked to mentioned the ‘hype’ around certain technologies, and blockchain is certainly one of them. Some developers mentioned that start-ups took up blockchain because it looked ‘cool’ to investors, or because they looked ‘much more sophisticated’ if they did on a blockchain what they could have done with other software. As might be expected, some of these developers reflected on the environmental footprint of the technologies they used, while others did not. Most importantly, however, these reflections revealed that both hardware and software raise important questions regarding their environmental implications, even before they are blended with other technologies or become incorporated into ‘data.’

End of Life

“Similarly, it is not a technology that is unethical if it fails or becomes a monster, but rather to stop caring about it, to abandon it as Dr Frankenstein abandoned his creation. Here we can recall Latour’s inspiring ‘scientifiction’ of Aramis (a promising transport system in Paris) where he tells the story of the collective troubles that led to the abandonment of the project (Latour, 1996). This version of caring for technology carries well the double significance of care as an everyday labour of maintenance that is also an ethical obligation: we must take care of things in order to remain responsible for their becomings.”

Maria Puig de la Bellacasa, 2011: 90

In this brief excerpt from her article “Matters of care in technoscience”, de la Bellacasa turns our attention to the double significance of care: both as an everyday labour of maintenance and as responsibility for what becomes of things. These two types of care especially reveal themselves in what is called the ‘end of life’ of IoT products. I mentioned above that the majority of start-ups fail within the first two years. Sometimes, too, companies do not go under but are sold, or merge with other smaller or bigger companies, and so their products reach their ‘end of life.’ But this does not always mean that their devices or applications stop working once the companies go under, change hands or change forms. Taking responsibility for the ‘becomings’ of their products is very costly, as several developers mentioned to me on different occasions. Sometimes it is financially impossible to provide service, repair or any other form of assistance; sometimes it is practically impossible: people move countries, jobs, sectors and so on. In other cases, developers might want to emotionally detach themselves from a previous venture and might no longer want to be associated with it. For end-users, however, this entails that their devices might stop being supported and that they might be exposed to important risks or, at the least, might end up having spent their hard-earned cash on an unusable device or platform.

Take Revolv’s smart home hub. Revolv was a smart home startup that was acquired by Google in 2014. The hub controlled a range of different IoT devices, from connected lights to coffee pots, via a single smartphone app [7]. Once the company was sold to Google, a Nest spokesperson notified Revolv customers that the cloud service previously offered to them would be shut down, which meant that Revolv would stop working entirely. For customers who had bought the product at a price of $300, this meant that it stopped working after only 18 months. While for some customers this might be an ‘OK’ price to pay for trying out new technologies, for others it might be punishing, not least financially. For instance, what would happen if a person who had a smart lock on her door came back from holiday to find that she could no longer unlock the door to her home because the smart lock company had gone under? How about wearables in the health sector? What if a heart chip stops working when the company producing it changes hands and no longer supports the chips already sold?

Ethics of emerging technologies go beyond data ethics because the ethical questions that arise go beyond data. Here, the concern/care for the end of life of an IoT product reveals that the concern is not only about what happens to the data that one company produced before going bankrupt or merging with another company, but that the ‘life’ of the product raises important ethical questions about what and/or who the developers care about, be it the environment, their end-users, investors or others.

Security and Privacy

Security and privacy are the two most frequently mentioned ethical concerns developers have in IoT. So much so that I have come to wonder whether ethics in IoT is increasingly being equated with them. From smart home devices recording private conversations and sending them to random contacts [8] to baby monitors being hacked [9], there is a wide range of examples of privacy violations and security breaches of IoT devices. While these security and privacy risks are usually framed around data security and data privacy, hardware on its own may in fact pose risks for privacy and security; equally, its connection to other devices might make an IoT system more vulnerable.

Several developers who work with hardware have informed me that hardware on its own is not neutral. Some hardware is more secure than other hardware, just as some sensors protect IoT devices against external attacks better than others. Especially with respect to hardware, country of origin was mentioned as a way to compare and contrast the security of devices. In general, it was explained to me that sensors produced in China [or in Southeast Asian countries] are expected to be a lot less secure than those produced in the EU, because the EU sets high standards and has compliance mechanisms in place to make sure sensor producers measure up to these standards. This added security, however, often comes with a price tag. Sensors produced in the EU were said to be a lot more expensive than their Chinese counterparts, and given that start-ups testing ideas or working on prototypes might not have huge budgets, they might choose the cheaper alternatives, even when these might pose security risks. In other words, the ‘capability’ of start-ups to afford more expensive sensors to make their products more secure emerges as a factor in their decision-making.

Similarly, hardware raises questions about security and privacy on its own, based on how it can be made to work with software, sensors and networks. I participated in a focus group that a developer organized to discuss and seek feedback on some of the designs for her product: a wearable alarm for women facing violence. There were various designs on the table, all working with more or less the same functionality. One of the aspects differentiating the designs was the kind of privacy each hardware design provides to the user: not only data privacy, but also privacy about the fact that she might feel the need to wear an alarm, and about how she might like to activate the alarm privately.

Another developer I met, who is also working on wearables, told me how different sensor-led textiles can have different security affordances. Some of the hardware that gets built into these wearable technologies affords only limited security, she informed me, due either to the need for a wider range of network accessibility or to the kind of materials used to build it. She told me that she chose more secure hardware for her product because she intended it to be used in the healthcare industry, which has higher security and privacy requirements than other industries. This is why, she said, many wearables are marketed for ‘Wellbeing and fitness’, even when they might have an obvious healthcare implication.

Lastly, the connections between IoT devices might expose them to privacy and security risks against which the devices individually seem to be protected. Many developers I have spoken to explained why interoperability in the Internet of Things remains an ideal, and not necessarily a practice. They told me that one can set up one’s IoT device to be as secure and private as possible, but once it is made interoperable with other devices, the risks those other devices are exposed to spread over the whole network. So, an insecure connected kettle might expose a connected doorbell, which is otherwise secure, to various attacks. It is important to consider here that the interactions between various operating systems also often remain unknown, and it is not easy to foresee what kind of security and privacy gaps might emerge once devices become interoperable.
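The kettle-and-doorbell example can be made concrete with a minimal sketch in Python. Everything in it is an illustrative assumption rather than something reported in the fieldwork: the device names, the `links` map of which devices talk to which, and above all the worst-case rule that an attacker who compromises one device can reach any device it is connected to. Under those assumptions, the set of devices exposed by one insecure device is simply everything reachable from it in the network.

```python
from collections import deque


def reachable_from(links, start):
    """Return every device reachable from `start` over interoperability links.

    `links` maps a device name to the devices it can talk to (hypothetical data).
    Uses a breadth-first search.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        device = queue.popleft()
        for neighbour in links.get(device, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def exposed_devices(links, insecure):
    """Worst-case assumption: a compromised device exposes all devices it can reach."""
    exposed = set()
    for device in insecure:
        exposed |= reachable_from(links, device)
    return exposed


# Hypothetical home network: the kettle and doorbell interoperate through a hub,
# while the thermostat is not connected to anything else.
links = {
    "kettle": ["hub"],
    "hub": ["kettle", "doorbell"],
    "doorbell": ["hub"],
    "thermostat": [],
}

print(sorted(exposed_devices(links, {"kettle"})))
```

In this toy network the doorbell is exposed through the hub even though it is secure on its own, while the unconnected thermostat is not. Real attack paths depend on protocols, permissions and operating systems that this sketch deliberately leaves out.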

Hence, the privacy and security risks that IoT devices pose go well beyond data privacy and data security risks. Without denying the importance of the latter, we should take into consideration how hardware, software, sensors and networks raise ethical concerns both individually and as part of a system.

From data ethics to the ethics of things

In this blog post, I explored whether a ‘data ethics’ approach helps us analyse emerging technologies such as IoT. I argued that IoT presents important challenges to the focus on data and its interactions in data ethics, because hardware, software, sensors and networks might all, individually or together, present ethical challenges and risks. I looked at Sustainability, End of life, Security and Privacy as ethical concerns arising from IoT and explored what these ethical challenges are. I argued that we need to widen our understanding of data ethics – go beyond data ethics – because ‘things’ also raise ethical risks.

Although data ethics is hugely important, focusing only on data might limit the kind of questions we ask about emerging technologies. For instance, we can ask how data breaches arise, and how data practices could be improved. But one of the key questions we can ask also relates to the possibilities of technologies: Could this [technology/device/software/algorithm] be made otherwise? This question requires us to go beyond what kind of data gets produced with a particular technology, and beyond consequentialist thinking about its implications. Instead, it urges us to consider what it means to build these technologies, who will be using them and what for. Consequently, it moves our thinking to take into consideration what values might have led to a particular technology, what or whom its developers cared for, and what their capabilities were in building it.

A version of this paper was presented at Uppsala University, Information Technology Centre, on 9 April 2019. I thank Dr Matteo Magnani and Dr Davide Vega d’Aurelio for their invitation, and all participants for their useful comments and questions.

References

de la Bellacasa, M. P. (2011). Matters of care in technoscience: Assembling neglected things. Social Studies of Science, 41(1), 85–106. https://doi.org/10.1177/0306312710380301

Floridi, L., & Taddeo, M. (2016). What is Data Ethics? (SSRN Scholarly Paper No. ID 2907744). Retrieved from Social Science Research Network website: https://papers.ssrn.com/abstract=2907744

Gabriels, K. (2016). ‘I keep a close watch on this child of mine’: A moral critique of other-tracking apps. Ethics and Information Technology, 18(3), 175–184. https://doi.org/10.1007/s10676-016-9405-1

Goodman, N. (1978). Ways of Worldmaking. Indianapolis, Ind: Hackett Publishing Company, Inc.

Herschel, R., & Miori, V. M. (2017). Ethics & Big Data. Technology in Society, 49, 31–36. https://doi.org/10.1016/j.techsoc.2017.03.003

Mai, J.-E. (2016). Big data privacy: The datafication of personal information. The Information Society, 32(3), 192–199. https://doi.org/10.1080/01972243.2016.1153010

O’Leary, D. E. (2016). Ethics for Big Data and Analytics. IEEE Intelligent Systems, 31(4), 81–84. https://doi.org/10.1109/MIS.2016.70

Peacock, S. E. (2014). How web tracking changes user agency in the age of Big Data: The used user. Big Data & Society, 1(2), 2053951714564228. https://doi.org/10.1177/2053951714564228

Tam, S.-M., & Kim, J.-K. (2018). Big Data ethics and selection-bias: An official statistician’s perspective. Statistical Journal of the IAOS, 34(4), 577–588. https://doi.org/10.3233/SJI-170395

Taylor, L. (2016). No place to hide? The ethics and analytics of tracking mobility using mobile phone data. Environment and Planning D: Society and Space, 34(2), 319–336. https://doi.org/10.1177/0263775815608851

Taylor, L. (2017). What is data justice? The case for connecting digital rights and freedoms globally. Big Data & Society, 4(2), 2053951717736335. https://doi.org/10.1177/2053951717736335

Willis, J. E. I. (2013). Ethics, Big Data, and Analytics: A Model for Application. Purdue: Purdue University.

Zwitter, A. (2014). Big Data ethics. Big Data & Society, 1(2), 2053951714559253. https://doi.org/10.1177/2053951714559253

[1] Ustek-Spilda F (2018) A Conceptual Framework for Studying Internet of Things

[2] http://moralmachine.mit.edu/ Accessed 26 April 2019

[3] Ustek-Spilda F (2018) A Conceptual Framework for Studying Internet of Things

[4] https://ifixit.org/ewaste Accessed 26 April 2019

[5] Greenpeace. 2017. Clicking Clean: Who is winning the race to build a green internet? https://storage.googleapis.com/planet4-international-stateless/2017/01/35f0ac1a-clickclean2016-hires.pdf Accessed 26 April 2019

[6] https://www.provenance.org/about Accessed 26 April 2019

[7] https://www.wired.com/2016/04/nests-hub-shutdown-proves-youre-crazy-buy-internet-things/ Accessed 26 April 2019

[8] https://www.theguardian.com/technology/2018/may/24/amazon-alexa-recorded-conversation Accessed 26 April 2019

[9] https://securityaffairs.co/wordpress/73848/hacking/fredi-wi-fi-baby-monitor.html Accessed 26 April 2019